As of March 2024, Python is ranked in Tiobe Programming Community Index like many months before, Java is in fourth place. Haskell plays more on the sidelines and only comes in 30th place. However, our multithreading benchmark test will reveal that Haskell is unfairly neglected, at least when it comes to concurrency.

Advertisement

(Picture: Anzela Minosi )

Anzela Minosi works freelance on gigs such as building LibreOffice projects, recompiling software written with C++, Haskell or Java, and Raspberry Pi support. Examples can be found in their Github account: github.com/amxyz-cyber

The importance of multithreading

In programming, a distinction is made between parallel calculations (parallelism) and simultaneous executing tasks (concurrency or multithreading). Both techniques have different areas of application: Parallel programs achieve speed through intensive use of hardware resources, especially several CPU cores. A program reaches its goal faster by dividing the calculation into several parts, which it delegates to the individual CPU cores. Parallel programming is characterized above all by the efficiency of the calculation, which benefits sorting algorithms, for example.

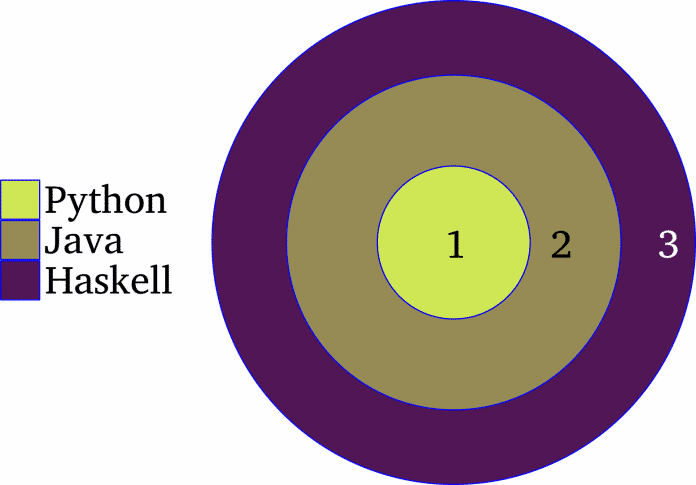

In contrast, multithreading or concurrency is a technology that is based on the use of multiple threads. Conceptually, the threads run at the same time, meaning users experience their effects overlapping. Whether they all actually run at the same time is a question of implementation. A multithreading-based program can run on a single CPU core by interleaving execution, or distributed across multiple cores or physical CPUs. Multithreading is particularly useful where the program needs to interact with several external agents that act independently of each other. For example, access by several external clients to a database server is based on multithreading. Another advantage of multithreading is the modularity of such programs. Because a thread that interacts with users is different from the one that talks to the database. Without multithreading, developers would have to write client/server applications with event loops and backreferences, for example, which is much more laborious. Implementations of concurrency, or parallelism in general, vary greatly from language to language in terms of the mechanisms used and the resulting complexity (Figure 1).

When it comes to multithreading, Haskell offers the most complexity (Fig. 1).

(Image: Anzela Minosi)

Python and the GIL thing

The one submitted in January 2023 Proposal PEP 703 to the Python Steering Council to optionally offer the Global Interpreter Lock (GIL), which makes multithreading more difficult, has met with a broad response in the Python community. And there are actually two builds with version 3.13: The standard will continue to be delivered with GIL for a few years, whereas the experimental build comes without GIL.

GIL ensures that the Python interpreter only runs one thread. This does not have a negative impact on performance in single-threaded programs, but it can turn into a bottleneck as soon as CPU-intensive calculations or multithreading play a role. The GIL exists in Python for one reason only: Python uses a reference count for all created Python objects to manage memory. This counts the pointers that point to an object. Once it reaches zero, the memory occupied by the object is released. GIL serves as an access lock to avoid race conditions where two threads modify the value of the reference count. This is how Python prevents deadlocks. GIL de facto turns any CPU-intensive program into a single-threaded program, which actually means an increase in performance for single-threaded programs since only an access lock is required.

Below we will use an example to show how multiprocessing processes can be used instead of threads to implement parallel processing of parts of a Python program despite GIL. Each process gets its own Python interpreter and storage space.

There are also other alternatives to avoid the GIL. For example, the Python standard interpreter CPython can be replaced: The nogil fork dispenses with GIL. The threading library can then be imported into your own Python module to run the program with multiple threads. However, this method also has disadvantages because nogil is based on an outdated Python version. Additionally, third-party libraries that depend on GIL cannot be easily installed or imported. And single-threaded code works even slower.

Another method is expansion modules written in C and integrated via the Foreign Function Interface (FFI). The FFI enables communication with C libraries. The code that relies on the use of multiple threads is exported as a C extension module and compiled with a C compiler such as gcc. The result is a shared C library with the extension .so. In Python, it is now possible to call a C function through multiple threads by importing the Ctypes library together with the threading library.

Multithreading in Java

The Java programming language is traditionally more multi-threaded than Python because every Java application relies on threads. Once the Java Virtual Machine (JVM) starts, it creates threads to carry out its duties – garbage collection, finalization – as well as a main thread that executes the Main method. Additionally, frameworks such as the GUI rendering libraries JavaFX or Swing make heavy use of threads to manage user interface events. There are also other classes such as the timer to create threads to execute delayed tasks. Thread safety in Java makes it impossible for an object to enter an illegal or inconsistent state, regardless of the operating system’s method calls and scheduler. Compared to Python, Java has a variety of tools that allow thread-safe implementation of multi-threaded applications. For example, variables that can be accessed by multiple threads at the same time can be defined using the keyword volatile implement thread-safe. Before a function on a with volatile If the marked variable is accessed, its value must be re-read from the main memory. Likewise, when modifying this variable, Java ensures that the value is written back to the main memory after the writing process has ended. Volatile variables usually play a role in client/server applications in order to implement run-until-shutdown patterns. In this way, a client signals a running thread to end the job it is currently processing so that the thread shuts down gracefully.

Blocks or methods, on the other hand, can be created using the keyword synchronized protect against data inconsistency. This restricts access to the code within a block or method. The cooperative mechanism behind synchronized hides, uses so-called monitors as access barriers. This is a token that every Java object has. Once a thread gains access to the block, it seizes the monitor for itself, executes the block, and releases the monitor again. The next thread that wants to gain access to the block will be blocked until the monitor holder releases the access lock.

Concurrent code execution can be implemented in Java using the Thread class, which has existed since the very first version of the compiler. In terms of execution unit, threads are lighter in weight compared to processes and can still execute arbitrary Java code. Threads are part of any process whose address space is shared among the threads. Each thread can be scheduled independently by the scheduler and has its own stack and program counter, but shares memory and objects with other threads of the same process.

In sum, Java’s thread model allows different threads in a process to easily share objects, with each thread able to modify objects it references. However, it is not always easy for developers to use access locks correctly, and the state of shared protected resources is quite at risk, even in unexpected places such as simple read access. In addition, the operating system’s scheduler decides which threads are currently running.

Haskell’s answer to multithreading

Haskell seems to be made for multithreading because its creators have rethought the usual concepts. Haskell is inherently parallel and has three forms of multithreading, which can be somewhat simplified into two categories. On the one hand, Haskell uses threads. Strictly speaking, a thread under Haskell represents an IO action that is executed independently of the other threads. On the other hand, Haskell makes use of parallelism, which is implemented differently than a thread-based program.

The most common techniques used to implement multithreading under Haskell are MVars, Channels and Software Transactional Memory (STM). In technical jargon, an MVar is a thread-safe variable that enables the exchange of information between two threads. During operation it behaves like a box for just one element and can be either full or empty. You can put something in or take something out of it. Once a thread attempts to put a value into an occupied MVar, the thread is put to sleep until another thread takes the value out. An empty MVar behaves similarly: If an attempt is made to remove something from an empty MVar, the thread has to wait until another thread places something in it.

Haskell is increasingly relying on STM, a development of MVar that provides an abstraction level for concurrency. Operations can be grouped and executed as a singular atomic operation transaction. It is significantly less problematic and has no deadlocks or race conditions. The variables under STM are of type TVar, where STM is applied to an entire block, similar to Java. One advantage that MVar has over STM is the fairness with which threads are processed. To do this, MVar applies the first-in-first-out principle, which means that threads access the MVar in turn.

In Haskell, most parallel programs are deterministic, meaning they produce reproducible results. To parallelize a function, the methods come from the module Control.Parallel for use. This avoids common multithreading problems such as deadlocks and race conditions.