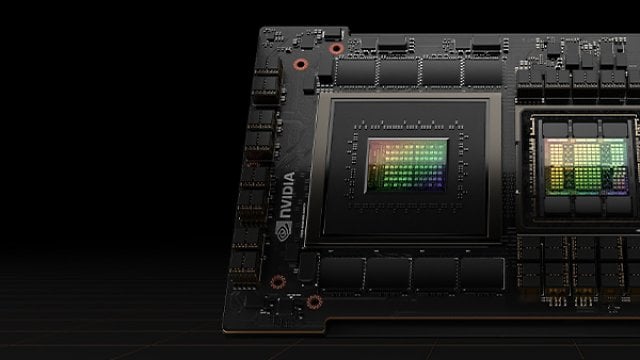

Nvidia’s Grace Hopper Superchip has entered series production, and a first system built on it is already lined up: the DGX GH200 combines 256 of these chips and is rated at one exaflop of computing power.

As announced, Nvidia held a dedicated keynote at the Computex 2023 trade fair on Monday. Unsurprisingly, artificial intelligence was a central topic: the DGX GH200 supercomputer presented there is meant to make training AI models easier and faster. It is built on the Grace Hopper chips announced last year, which, according to CEO Jensen Huang, are now in series production.


According to the keynote presentation, the Nvidia DGX GH200 consists of 256 individual modules that, as a cluster, are said to deliver one exaflop of computing power. However, Nvidia bases this figure on the H100 GPU's FP8 Tensor Core performance of 3,958 teraflops; multiplied by 256, this yields the 1.013 exaflops quoted by the company. The Top500 list of the most powerful supercomputers, by contrast, ranks systems by FP64 performance, where the DGX GH200, at 34 teraflops per Grace Hopper chip, “only” reaches 8.704 petaflops.
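The gap between the two headline numbers comes down to simple multiplication of per-chip peak figures. The short Python sketch below reproduces that arithmetic using only the values quoted above; it assumes a naive peak (per-chip figure times chip count) and ignores interconnect overhead or real-world efficiency, which is also how the marketing figure is derived. The constant and function names are illustrative, not from Nvidia.

```python
# Per-chip peak figures as quoted in the article, in teraflops.
FP8_TENSOR_TFLOPS_PER_CHIP = 3958  # H100 FP8 Tensor Core figure used by Nvidia
FP64_TFLOPS_PER_CHIP = 34          # FP64 figure relevant for the Top500 comparison
NUM_CHIPS = 256                    # Grace Hopper chips in one DGX GH200


def aggregate_tflops(per_chip_tflops: int, num_chips: int) -> int:
    """Naive system peak: per-chip peak times chip count (no efficiency losses)."""
    return per_chip_tflops * num_chips


fp8_total = aggregate_tflops(FP8_TENSOR_TFLOPS_PER_CHIP, NUM_CHIPS)
fp64_total = aggregate_tflops(FP64_TFLOPS_PER_CHIP, NUM_CHIPS)

# 1 exaflop = 1,000,000 teraflops; 1 petaflop = 1,000 teraflops.
print(f"FP8:  {fp8_total:,} TFLOPS = {fp8_total / 1e6:.3f} EFLOPS")   # FP8:  1,013,248 TFLOPS = 1.013 EFLOPS
print(f"FP64: {fp64_total:,} TFLOPS = {fp64_total / 1e3:.3f} PFLOPS")  # FP64: 8,704 TFLOPS = 8.704 PFLOPS
```

The factor of more than 100 between the two results reflects only the different number formats, not a different machine.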

The chips are linked by a total of 36 NVLink switches, so the DGX GH200 effectively behaves like a single giant GPU with 144 TiB of shared memory. The system is correspondingly large: according to Huang, it weighs around 20 tons and contains around 240 kilometers of fiber optic cable. The chips communicate with each other at up to 900 GB per second, and the AI supercomputer as a whole has a bisection bandwidth of 128 TB per second. The DGX GH200 is expected to be available later this year.
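To put the 144 TiB figure into perspective, the sketch below divides it across the 256 chips. This assumes the memory is spread evenly over the chips, which the article does not state explicitly; the names used are purely illustrative.

```python
TOTAL_MEMORY_TIB = 144  # shared memory quoted for the whole DGX GH200
NUM_CHIPS = 256         # Grace Hopper chips in the system
GIB_PER_TIB = 1024      # binary units: 1 TiB = 1024 GiB


def per_chip_share_gib(total_tib: int, num_chips: int) -> float:
    """Even split of the quoted total across all chips (an assumption, not a spec)."""
    return total_tib * GIB_PER_TIB / num_chips


share = per_chip_share_gib(TOTAL_MEMORY_TIB, NUM_CHIPS)
print(f"~{share:.0f} GiB per chip")  # ~576 GiB per chip
```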

Source: Nvidia