You know it from your own photos: people you never meant to photograph often show up as reflections in shiny objects, and quite often so does the photographer. Until now, this has mostly been an annoyance for the viewer, of interest at most to artists who use it to create attractive optical effects.

Seeing more than meets the eye

A team of scientists from the US universities Massachusetts Institute of Technology (MIT, Cambridge) and Rice University (Houston) now wants to put these unloved reflections to good use: in image recognition systems that capture more visual data about a space. This could be useful in the automotive sector, for example, to detect hazards in good time that neither the driver nor conventional cameras can see, be it children playing beyond the next intersection or cyclists that even a blind spot assistant misses.

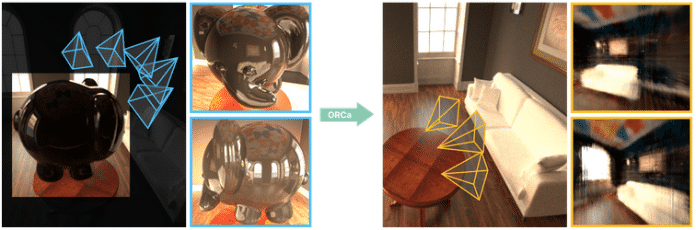

In the study entitled “Glossy Objects as Radiance-Field Cameras”, Ramesh Raskar, Tzofi Klinghoffer and their colleagues show how this can work. “Reflections on shiny objects contain valuable and hidden information about the environment,” the scientists write. “By converting these objects into cameras, we can unlock exciting applications, such as imaging beyond the field of view and from seemingly impossible vantage points like the human eye.” This is made possible by computer vision algorithms that undo the distortion in the mirrored image information and correct it.

The new image recognition system captures the reflections on the elephant’s shiny surface and uses them to create an image (right side of the picture).

(Image: MIT and Rice University)

Depth maps in 5D

In their paper, Raskar and colleagues demonstrate this using a ceramic mug and a shiny metal paperweight. Such objects can be turned into virtual cameras from which distances, or a view of the whole scene, can be calculated. So far, the resulting images are not particularly sharp or detailed, because missing information has to be filled in. But they are good enough to create depth maps, which is exciting for vehicle sensors. The process is currently limited to still images.
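How distances could in principle be derived from two virtual viewpoints can be illustrated with a simple triangulation. The following is only a minimal sketch under stated assumptions, not the method from the paper: it assumes two rays with known origins and directions that both point at the same scene point, and the function name `triangulate` is hypothetical.

```python
import math

def triangulate(p1, d1, p2, d2):
    """Return the midpoint of the shortest segment between two rays.

    Each ray is origin p + t * direction d. Illustrative only: the
    origins stand in for two hypothetical virtual viewpoints.
    """
    dot = lambda a, b: sum(x * y for x, y in zip(a, b))
    w = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel, no unique closest point")
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    q1 = tuple(p + t1 * k for p, k in zip(p1, d1))
    q2 = tuple(p + t2 * k for p, k in zip(p2, d2))
    return tuple((x + y) / 2 for x, y in zip(q1, q2))

# Two viewpoints two units apart, both looking at a point four units away.
left, right = (-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)
point = triangulate(left, (1.0, 0.0, 4.0), right, (-1.0, 0.0, 4.0))
depth = math.dist(left, point)  # distance from the left viewpoint
```

Repeating this for many pixel pairs would yield one depth value per pixel, which is the basic idea of a depth map.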

First, an object is photographed from different angles, capturing multiple reflections on its shiny surface. Then the system, which the researchers have named ORCa (“Objects as Radiance-Field Cameras”), converts that surface into a “virtual sensor”. An algorithm calculates the light and reflections that hit each virtual pixel on the object’s surface. This produces an image that shows the environment from the perspective of the object. The image is also five-dimensional, because it captures the intensity and direction of the light rays hitting every point in the scene. So far it is only a proof of concept. Practical applications are still pending.
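The idea of a virtual pixel can be illustrated with the ordinary law of mirror reflection. The sketch below is not ORCa’s actual algorithm; it assumes a perfectly mirror-like surface with a known normal, and all function names are hypothetical. It computes which direction of the environment a single surface point “sees” and packs the result as five numbers: three for position, two for direction.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def reflect(d, n):
    """Mirror the unit direction d about the unit normal n: r = d - 2(d.n)n."""
    k = 2.0 * sum(x * y for x, y in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))

def virtual_pixel_ray(camera_pos, surface_point, surface_normal):
    """Return origin and direction of the environment ray seen at one surface point."""
    view_dir = normalize(tuple(p - c for p, c in zip(surface_point, camera_pos)))
    return surface_point, reflect(view_dir, normalize(surface_normal))

def to_5d(origin, direction):
    """Pack a ray as (x, y, z, theta, phi): 3D position plus 2D direction."""
    dx, dy, dz = normalize(direction)
    theta = math.acos(max(-1.0, min(1.0, dz)))  # polar angle measured from +z
    phi = math.atan2(dy, dx)                    # azimuth in the x-y plane
    return (*origin, theta, phi)

# A camera looks straight down at a surface point tilted by 45 degrees.
camera = (0.0, 0.0, 5.0)
origin, direction = virtual_pixel_ray(camera, (0.0, 0.0, 0.0), (1.0, 0.0, 1.0))
sample = to_5d(origin, direction)  # the reflected ray points along +x
```

On a real object the surface is curved, so every point has a different normal and therefore looks in a different direction. That is what makes a shiny object behave like a very wide-angle, if heavily distorted, camera.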

(bsc)