To sum up MWC 2024, two letters are enough: AI. Whether in mobile antennas, communication satellites or backend software, references to ever faster or more comprehensive artificial intelligence are omnipresent in the mobile industry at the start of 2024. One approach is particularly interesting for consumers: under the buzzword on-device AI, the industry wants to bring artificial intelligence into consumers' pockets and onto their desks.

On-device AI is quickly explained. AI tools such as ChatGPT or Midjourney usually run in the cloud. The prompts a user enters travel over the internet to a server, which then sends the result back to the device the same way. The advantage: because more computing power is available in the cloud than on a smartphone or PC, the result arrives faster despite the detour. The disadvantages: without an internet connection, the tools are unavailable, and sensitive data may end up somewhere on the internet. With on-device AI, according to its providers, the cloud becomes superfluous. The calculations run directly on the device, even when no Wi-Fi or cellular network is available, and prompts and other data never leave the device.

On-Device AI with and without special hardware

At MWC 2024, there was disagreement about what the hardware required for this should look like. In principle, even the slowest CPU core or graphics unit in a $100 smartphone can perform AI calculations and process prompts. ARM demonstrated what this could look like: the British processor designer had the CPU alone process the inputs. The underlying, unstated thesis: dedicated AI accelerators are not a must for running artificial intelligence "on device" in consumer devices such as smartphones. However, they can greatly increase efficiency and thereby extend battery life.

ARM's demonstration is the opposite of what Qualcomm is aiming for. The company, which presented new wireless platforms with AI hardware and functions at MWC 2024 in the form of the Snapdragon X80 and FastConnect 7900, sees a great need for on-device AI in a wide variety of devices and components. Large language models are to run locally on smartphones and notebooks; at MWC, the company showed models with seven billion parameters. These are to be processed by dedicated NPUs (Neural Processing Units) built into the company's processors.

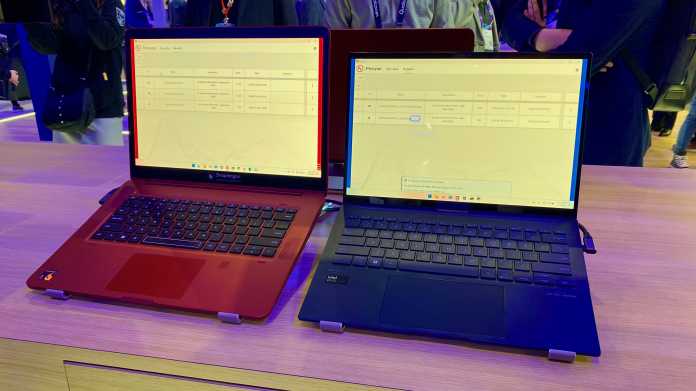

Qualcomm’s reference notebook with Snapdragon

(Image: heise online / pbe)

Qualcomm wants to outpace the notebook competition from AMD (Ryzen 7000 / 8000) and Intel (Core Ultra 100), whose processors integrate slower AI units. In a benchmark comparison with the Core Ultra 7 155H, Qualcomm's upcoming Snapdragon […] The Intel model needed a good 22 seconds, i.e. around three times as long, using its NPU (for the U-Net AI model), its CPU cores (text encoder) and its GPU (variational autoencoder, VAE). Intel's AI unit alone has less computing power: it manages around 11 trillion operations per second (11 TOPS) in the INT8 data format, while the combination of CPU, GPU and NPU reaches 34 TOPS. Qualcomm quotes 45 TOPS for its NPU.

Even when asked, Qualcomm did not provide any details about the CPU configurations. In particular, the maximum permitted electrical power consumption (power limit) can influence performance and distort comparisons. The AI comparison above should therefore be treated with caution.

With its AI concept smartphone, also presented in Barcelona, Deutsche Telekom offers one answer to the question of what consumers get from on-device AI beyond saving data volume. The device uses the AI interface Natural from Brain.ai, which, according to Telekom, makes apps unnecessary. Instead of installing a separate application for each service, as before, users simply tell the AI what they want. The goal: Natural recognizes the input and its context, searches the internet for the desired content and then presents it in a suitable format. The necessary performance comes from Qualcomm's Snapdragon 8 Gen 3, which has various AI accelerators. All AI computation is supposed to run locally on the device. Personal or sensitive data only ends up in the cloud or with the retailer when absolutely necessary, for example for payment processing.

Telekom's AI phone has a processor with dedicated AI accelerators, but at first glance they don't have much to do.

(Image: heise online / dahe)

Working better with local AI

The on-device AI is also meant to handle tasks in the background, in the short term above all wireless data transmission. The artificial intelligence in Qualcomm's new modem and Wi-Fi chip is intended to reduce power consumption by analyzing the priority of transmissions and adjusting performance accordingly. That sounds good, but there is a catch: as long as the other end of the link, i.e. the cell tower or the router, lacks the same functions, the smartphone's AI will be up against "dumb" wireless infrastructure. And there is another problem: what happens when numerous devices with intelligent Wi-Fi control compete for priority on the same network, both with each other and with "dumb" devices? There is no answer to this question yet, for example in the form of a standard, at least not publicly.

Not every AI needs special accelerators

In the medium and long term, problems such as the aforementioned competition on Wi-Fi networks are likely to resolve themselves, if serious problems arise at all. What remains to be seen, however, is whether on-device AI will really play as big a role as Deutsche Telekom and Qualcomm, two of its most prominent proponents, predict. At first glance, the artificial intelligence in Telekom's concept device does nothing that the voice assistants from Amazon, Apple and Google don't already do on devices available today. However, on-device AI offers clear advantages for text and image generators and for photo and video editing, for example. Hardware designed specifically for these tasks often handles them faster and more efficiently than chips without such accelerators.

Note: Qualcomm covered the author’s travel expenses to MWC.

(pbe)