In his classic novel, 1984, George Orwell imagined how Britain might one day become a totalitarian surveillance state.

Yet as Orwell’s novel celebrates its 75th anniversary this month, British police are already deploying technologies that would put Big Brother to shame.

From the facial recognition cameras watching you shop to the algorithms predicting crimes before they happen, these tools feel as if they’ve been ripped from the pages of science fiction.

But there is nothing fictional about the AI cops already patrolling Britain’s streets – and experts say there is only more to come.

Jake Hurfurt, head of research and investigations at Big Brother Watch, warned MailOnline: ‘We’re sleepwalking into a high-tech police state.’

From facial recognition in your supermarket to the creepy tech predicting future crime, Britain’s police are already using AI to keep an eye on criminals across the country

Live facial recognition

Since 2015, several police forces across the UK have begun to use Live Facial Recognition to catch wanted individuals

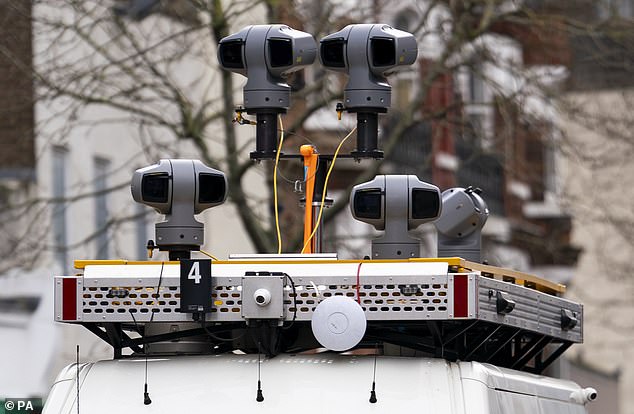

Live facial recognition, as the name suggests, allows the police to recognise wanted individuals among a large crowd in real time.

Police use a series of cameras to record the faces of anyone who passes through a set zone.

An algorithm then compares the faces of those walking in front of the camera to a ‘watchlist’ of wanted criminals and an alert is generated if the AI spots a match.
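To illustrate what ‘comparing faces to a watchlist’ can mean in practice, here is a minimal sketch. It assumes each face has already been turned into a numeric ‘embedding’ vector by some face-analysis model, and that a match is declared when the cosine similarity to a watchlist entry exceeds a threshold. The function names, the 0.8 threshold and the toy three-number vectors are all hypothetical; this is not how any particular police system is known to work.

```python
from __future__ import annotations

import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Similarity between two face embeddings: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # guard against empty/zero embeddings
    return dot / (norm_a * norm_b)


def check_against_watchlist(
    face: list[float],
    watchlist: dict[str, list[float]],
    threshold: float = 0.8,  # hypothetical alert threshold
) -> Optional[str]:
    """Return the ID of the best watchlist match at or above the threshold, else None."""
    best_id, best_score = None, threshold
    for person_id, template in watchlist.items():
        score = cosine_similarity(face, template)
        if score >= best_score:
            best_id, best_score = person_id, score
    return best_id  # None means no alert is generated


watchlist = {"suspect_a": [0.9, 0.1, 0.0], "suspect_b": [0.0, 1.0, 0.0]}
print(check_against_watchlist([0.88, 0.12, 0.01], watchlist))  # alert for suspect_a
print(check_against_watchlist([0.0, 0.0, 1.0], watchlist))     # no alert
```

The threshold is where the trade-off critics worry about sits: lowering it flags more genuine suspects but also more innocent passers-by.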

Since 2015, several police forces across the UK have used this technology in public places as part of targeted crackdowns and to police busy events.

London’s Metropolitan Police and South Wales Police have been among the keenest adopters of the technology.

This year alone, South Wales Police have deployed eight live facial recognition zones, including at a Six Nations game and a Bruce Springsteen concert.

In total, these deployments scanned the faces of 156,032 people but led to only a single arrest.

Meanwhile, the Metropolitan Police have already deployed live facial recognition a staggering 73 times this year, recording 146,157 faces and leading to 209 arrests.
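The gap between the two forces is easier to see as a ratio. The short sketch below uses only the figures reported above; the function names are our own, and faces scanned per arrest is a crude measure that says nothing about how many alerts were false or how many of the people scanned were actually on a watchlist.

```python
# Figures as reported above for this year's deployments: (faces scanned, arrests).
deployments = {
    "South Wales Police": (156_032, 1),
    "Metropolitan Police": (146_157, 209),
}


def faces_per_arrest(faces_scanned: int, arrests: int) -> float:
    """Average number of faces scanned for each arrest made."""
    return faces_scanned / arrests


def arrests_per_10k(faces_scanned: int, arrests: int) -> float:
    """Arrests made per 10,000 faces scanned."""
    return arrests / faces_scanned * 10_000


for force, (faces, arrests) in deployments.items():
    print(f"{force}: {faces_per_arrest(faces, arrests):,.0f} faces per arrest, "
          f"{arrests_per_10k(faces, arrests):.2f} arrests per 10,000 faces")
```

On these numbers, the Met made roughly one arrest for every 700 faces scanned, while South Wales Police scanned more than 156,000 faces for its single arrest.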

The police and Home Office argue that these large-scale surveillance operations are justified by their results.

For example, the Home Office notes that a wanted sex offender was sent to jail after facial recognition spotted them at the Coronation of King Charles.

A spokesperson for the National Police Chiefs’ Council told MailOnline: ‘Policing is in a challenging period and AI presents opportunities for forces to test new ideas, be creative and seek innovative solutions to help boost productivity and be more effective in tackling crime.’

Police deploy vans equipped with cameras in special zones, recording the faces of anyone who walks by

Recordings of an individual’s face are compared to a watch list of wanted individuals by an AI

However, civil rights groups have raised concerns over the use of live facial recognition, arguing that the technology is overly invasive.

Mr Hurfurt says: ‘It is clear that some [uses of AI], including Live Facial Recognition Technology, are incompatible with a democratic society and should be banned.’

In 2020, the Court of Appeal found that South Wales Police’s trial, which scanned the faces of thousands, had been conducted unlawfully, without proper regard for privacy or data protection.

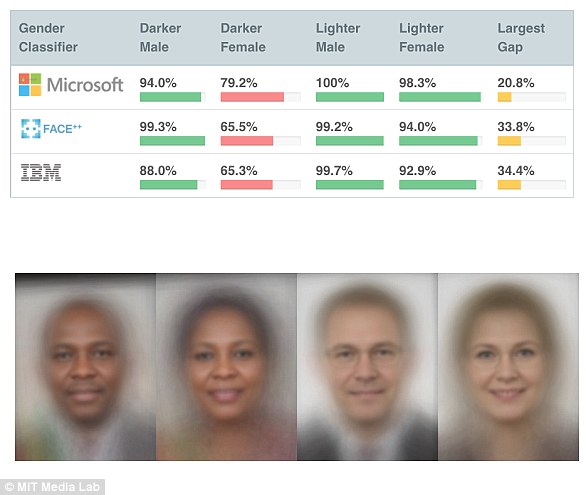

There are also concerns that facial recognition may be worse at recognising black faces, making false positives and unnecessary arrests more likely.

While the Metropolitan Police’s trials were found to have an accuracy of 90 per cent, testing conducted by the UK National Physical Laboratory (NPL) suggests bias does persist.

In the NPL’s report published in March last year, the Met’s technology was found to have a ‘statistically significant imbalance between demographics with more Black subjects having a false positive than Asian or White subjects.’

The public must be informed when Live Facial Recognition is in place but campaigners question whether this is too invasive

‘Eye in the sky’ cameras

Another way in which AI is making its way into policing is through the use of cameras which automatically detect when crimes are taking place.

Roads across the country may soon be watched over by an AI cop designed to crack down on bad drivers.

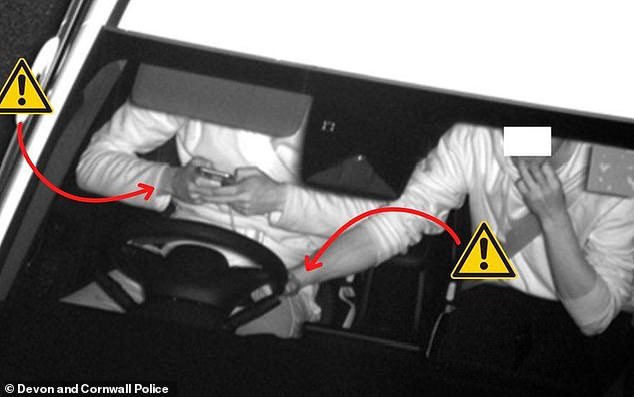

This week, Safer Roads Humber began a weeklong trial of an AI-powered mobile camera trained to spot whether drivers are on their phones or not wearing a seatbelt.

The camera was designed by Australian road safety company Acusensus and is being deployed by infrastructure firm AECOM, with which National Highways has partnered for the trial.

The camera is deployed from a trailer parked at the side of the road and records images of any drivers passing by.

Safer Roads Humber has begun a trial using an AI camera to spot when people are driving without a seatbelt

The images are then processed by an AI, with possible offences flagged and passed on to the police for consideration.

To avoid bias, the system is ‘locked down’, meaning it cannot learn while in use.

A spokesperson for National Highways told MailOnline: ‘This ensures that the algorithms do not drift and introduce bias.

‘The system is taught to look for the presence of a phone or seat belt, any characteristics of the driver or vehicle are not relevant to the determination of phone or seat belt offence probability.’

The new AI-powered cameras caught 117 people using their mobile phones in just three days

This is part of a wider National Highways trial, which began this year and aims to test whether the AI cameras could make driving safer.

The current trial will run until March 2025, but the cameras have been used in various trials since 2021.

During one of the earliest deployments in Devon and Cornwall, almost 300 drivers were found using mobile phones or not wearing seatbelts in just three days.

And, as part of a 15-day trial in 2022, a larger vehicle-based system detected 590 seatbelt and 45 mobile phone offences.

The cameras are being deployed as part of a trial running until 2025 which will inform whether a wider rollout of AI cameras would be feasible

Facial recognition in shops

But it isn’t just on the streets and roads that AI is keeping an eye on you.

Supermarkets and other shops are increasingly making use of facial recognition technology to crack down on shoplifting.

In October last year, Policing Minister Chris Philp announced a £600,000 ($752,000) partnership, known as Project Pegasus, between police and 13 of the UK’s biggest retailers.

Under Project Pegasus, shops like John Lewis, Tesco, and Sainsbury’s would be able to send their own CCTV footage to the police for analysis.

The police would then use AI facial recognition software to identify the shoplifters.

This type of technology, called retrospective facial recognition, searches for suspects against a database of known individuals.

In 2023, 13 major retailers joined the police in Project Pegasus, handing over CCTV footage for the police to scan using facial recognition software

According to a study by South Wales Police, this can reduce the time taken to identify a suspect from around 14 days to a few minutes.

In the UK, there is currently no specific law governing its use, so it is up to the police to decide under common law when it is appropriate.

The Home Office maintains that the deployment must be ‘necessary, proportionate, and fair’, but there are concerns that this doesn’t provide much of a guardrail.

South Wales Police already identifies 200 suspects a month using retrospective facial recognition.

And, last year, the policing minister urged police chiefs to double the number of AI-enabled facial recognition searches to exceed 200,000.

While Mr Hurfurt says Retrospective Facial Recognition can have a legitimate role in policing, he argues that better protections are needed.

He says: ‘Britain needs to have a proper conversation about the role big data and AI plays in our policing.

‘Rather than allowing the government and the police to cobble together patchwork legal justifications to experiment on the public with intrusive and Orwellian technology.’

But it isn’t just the police using AI to watch you shop: stores are increasingly installing their own AI surveillance tools.

One UK company, Facewatch, provides facial recognition for shops including Spar, Sports Direct, Budgens, and Costcutter.

Facewatch says its system identifies the face of a ‘subject of interest’ as they enter the premises and sends an alert to the shop owner.

You don’t need a criminal record to get on Facewatch’s watchlist, just a formal witness statement giving ‘reasonable grounds’ to suspect you of an offence.

Facewatch then holds the biometric data of those put on its watchlists for a year after they are reported, so that it can search for those individuals in CCTV footage.

Many shops have also installed software from Facewatch (pictured) which scans customers’ faces against a watch list of suspected shoplifters

However, Facewatch has faced persistent criticism and a number of legal challenges.

An investigation by the Information Commissioner’s Office found that Facewatch’s system was permissible under the law, but that the company’s policies had breached data protection legislation on several points.

During the investigation, which concluded in 2023, Big Brother Watch accused the Government of lobbying on behalf of Facewatch.

In January this year, Southern Co-Op was accused of using Facewatch’s technology to disproportionately target people in poorer areas.

Big Brother Watch has also launched legal action against the company and the Met Police after the system incorrectly identified a teenage shopper as a shoplifter.

The Southern Co-Op has been criticised for disproportionately using facial recognition in lower-income areas

Predictive policing

In the 2002 sci-fi film Minority Report, the police look into the future to predict and stop crimes before they even happen.

And while the UK police aren’t about to start pursuing people for future crimes, they are using AI in an eerily similar way.

Through a technology called ‘predictive policing’, cops are using AI to predict where crime is likely to happen and who is likely to commit it.

These systems apply algorithms to the vast amounts of data available to the police to try to predict the risk of crime.

Avon and Somerset Police use a system called SPSS, which generates a ‘risk score’ for individuals based on past data.

In the 2002 sci-fi film Minority Report, the police look into the future to stop crimes before they even happen. In the UK the police are beginning to use eerily similar predictive policing tools

Because individuals have a right not to be subject to a ‘purely automated decision’, the force is keen to stress that its system does not ‘assess the likelihood for an individual to commit a crime’.

Instead, Avon and Somerset Police say that the system generates a score which is used to advise a final judgement made by a human.

However, a list of the models used in SPSS, revealed by a Freedom of Information (FOI) request, clearly states that they can ‘calculate risk of committing a burglary in next 12 months.’

The models also claim to be able to predict the risk of someone committing a serious robbery, re-offending, or even becoming a victim of domestic violence.

Other predictive policing tools focus on geographic areas rather than individuals.

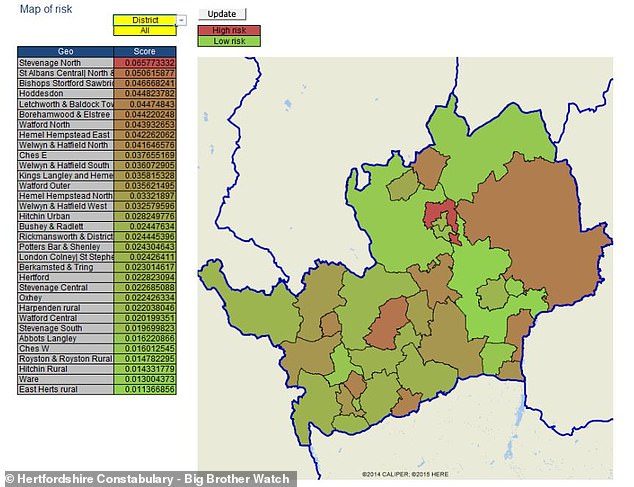

Since 2018, Hertfordshire Police and a company called Process Evolution have been developing a prediction tool named HARM.

Hertfordshire Police’s HARM tool (pictured) allows the police to predict the risk of crime area by area using factors like unemployment and previous incidents of antisocial behaviour

This tool lets police generate a risk score for areas with populations as small as 1,000 to 3,000 people, using data such as unemployment, deprivation, antisocial behaviour rates, and previous crimes.
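How HARM actually combines its inputs has not been published here, so the following is only a hypothetical illustration of one common way to turn several area-level factors into a single score: rescale each factor to a 0 to 1 range across all areas, then take a weighted sum. The equal weights, the factor names and the example figures are invented for illustration and are not Hertfordshire Police’s or Process Evolution’s method.

```python
from __future__ import annotations

# Hypothetical factor names mirroring the kinds of data described above.
FACTORS = ("unemployment", "deprivation", "antisocial_behaviour", "previous_crimes")


def area_risk_scores(
    areas: dict[str, dict[str, float]],
    weights: dict[str, float] | None = None,
) -> dict[str, float]:
    """Score each area between 0 and 1 using min-max scaling and a weighted sum."""
    # Assumption: equal weights unless told otherwise.
    weights = weights or {f: 1.0 / len(FACTORS) for f in FACTORS}
    lowest = {f: min(a[f] for a in areas.values()) for f in FACTORS}
    highest = {f: max(a[f] for a in areas.values()) for f in FACTORS}

    scores = {}
    for name, values in areas.items():
        score = 0.0
        for f in FACTORS:
            span = highest[f] - lowest[f]
            # Rescale so the lowest area scores 0 and the highest scores 1 on this factor.
            scaled = (values[f] - lowest[f]) / span if span else 0.0
            score += weights[f] * scaled
        scores[name] = score
    return scores


# Invented example figures for three small areas.
areas = {
    "Area A": {"unemployment": 8.0, "deprivation": 0.7, "antisocial_behaviour": 40, "previous_crimes": 120},
    "Area B": {"unemployment": 5.0, "deprivation": 0.4, "antisocial_behaviour": 25, "previous_crimes": 60},
    "Area C": {"unemployment": 2.0, "deprivation": 0.1, "antisocial_behaviour": 10, "previous_crimes": 0},
}
print(area_risk_scores(areas))
```

Because inputs such as previous crimes reflect where police have already been looking, a score built this way can feed past patrol patterns back into future ones, which is the entrenchment concern critics raise.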

As with other AI tools, the police argue that these systems help them use resources more efficiently and lower crime rates.

The National Police Chiefs’ Council told MailOnline that ‘AI can make us more effective, efficient, and productive’ across the police’s entire remit.

However, predictive tools have come under criticism for potentially entrenching the biases recorded in previous police data.

For instance, in 2018 Durham Constabulary was accused of using ‘crude profiling’ of ethnicity and credit reports to assess individuals’ risk of re-offending.

Researchers also found that the tool was ‘deliberately overestimating the risk of individual offenders’ in order to reduce high-risk errors.

The police use predictive tools to use their resources more efficiently, but campaigners warn they risk introducing over-policing and bias

Monitoring football stadiums

One place where AI policing has become increasingly common is the football ground.

But this isn’t some fancy new VAR: the police are bringing AI facial recognition to bear at some of the biggest events.

Police forces use the technology to pick out individuals who may be wanted for antisocial behaviour or other crimes.

With thousands of fans turning up and only limited numbers of police, this opens up possibilities to catch those who may otherwise slip by.

In 2023, the Met used Live Facial Recognition at a Premier League game for the first time ever, scanning the faces of thousands of fans on their way to watch Arsenal v Tottenham.

In 2023 and 2024 the police used Live Facial Recognition at White Hart Lane during the North London Derby. In 2023, three arrests were made after cameras scanned the faces of thousands of fans

The three people arrested included a man wanted on recall to prison for sexual offences, and another who was charged with breaching a football banning order.

This April, the Met once again warned that it would deploy Live Facial Recognition ‘in the vicinity of White Hart Lane’ ahead of the North London Derby.

Likewise, the Met’s records reveal that the technology was deployed twice in one day at Crystal Palace’s Selhurst Park during a match against Brighton.

Despite scanning the faces of over 4,000 fans over three and a half hours, no arrests were made.

And, with records showing widespread use at concerts and other sporting events, it seems that facial recognition in stadiums is likely to become much more common.