Professor Stuart J. Russell

“We can’t afford an AI Chernobyl”: Expert warns in stark terms against unrestrained AI development

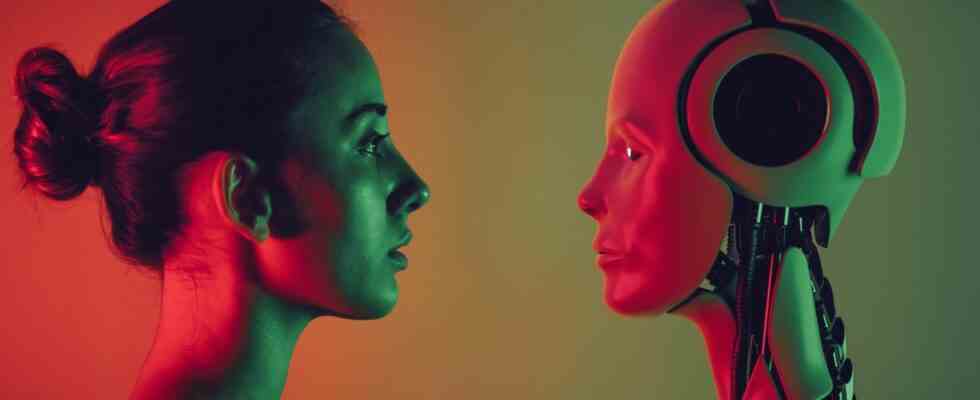

With programs such as ChatGPT, artificial intelligence is becoming a conversation partner (symbolic image)

© imaginima / Getty Images

In an open letter, experts call for limits on AI before it is used without restriction. One of the signatories, Stuart J. Russell, has now voiced his fears in stark terms.

Since the end of last year, artificial intelligence has suddenly been on everyone’s lips. Thanks to programs like ChatGPT and image generators like Midjourney, the technology has reached the mainstream. In an open letter, a group of scientists, experts and tech leaders has now called for its development to be paused. In an interview, signatory Stuart J. Russell goes even further and warns of the consequences the technology could bring.

“It’s a recipe for disaster,” Russell believes. He has worked on artificial intelligence for decades, is a professor at the University of California, Berkeley, and is the author of the standard textbook “Artificial Intelligence: A Modern Approach”. The current surge of unregulated AI puts both the industry and humanity at a crossroads, he warns in the interview. “We can’t afford a Chernobyl for AI.”

Fear of disaster

He does not choose the comparison by chance: in his view, the current situation resembles building a nuclear power plant. “If I wanted to build a nuclear power plant, the government would ask me to prove that it’s safe, earthquake-proof, that it won’t explode,” he says. “If I couldn’t do that, they wouldn’t say: never mind, just go ahead, it’s okay.” For this reason, he believes the government should also set limits for the AI industry, albeit in cooperation with it.

A major problem with the current development is that we simply do not know exactly how the technology works, Russell explains. AI systems are built by training models on large sets of data and then trying to steer them in a particular direction. “We know the steps by which we arrived at programs like ChatGPT that can produce a mathematical proof in the style of a Shakespearean poem. But we have no idea how they actually do it. We just don’t know.” That also makes it harder to prevent undesirable behavior, he explains. “It’s like scolding a dog: you just keep telling him he’s a bad dog whenever he does something wrong, and hope he learns from it.”

A hard demand

The open letter published at the end of March, signed by Russell as well as tech billionaire Elon Musk and Apple co-founder Steve Wozniak, calls for a pause in development. But that is not enough for Russell. “In my view, the six months it calls for are not sufficient,” the professor says. In his opinion, safety guidelines would first have to be defined for each type of AI, and companies could release their programs only after proving that they comply. “If these guidelines can’t be defined, if you can’t prove that they are being complied with, then that’s just bad luck,” he says bluntly.

However, the expert stresses that his demand should not be understood as a rejection of AI. “I’ve been researching artificial intelligence for 45 years, I love it. I believe its potential to change the world for the better is limitless,” he says. “But we don’t want to see a Chernobyl for AI that has really serious consequences.” What those consequences might look like is simply unknown. Russell therefore has a clear demand: “We have to grow up and finally take the possibility of serious consequences seriously.”

Source: BusinessToday