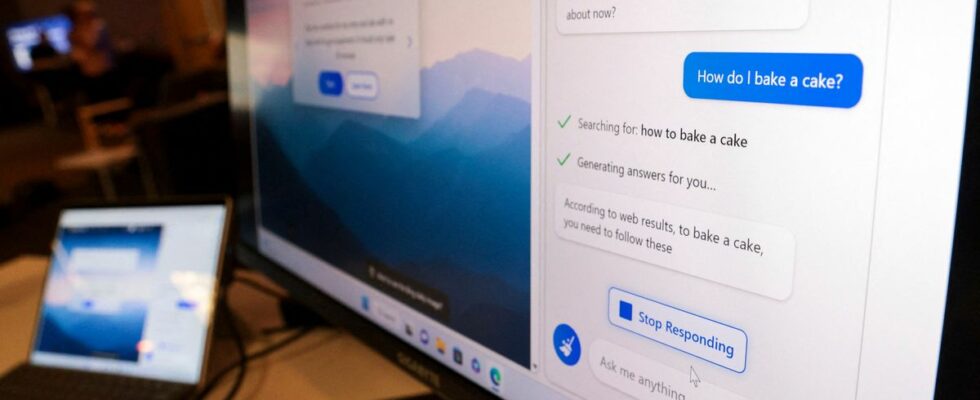

Are we ready for intelligent assistants? The question arises ten days after Microsoft launched a new version of its Bing search engine, enhanced with a chatbot through a partnership with the start-up OpenAI, the company behind ChatGPT. 20 Minutes, like many media outlets, was given access without having to sit on the waiting list. While first impressions are promising, and as we await Google’s response with Bard, the machine still makes many mistakes without realizing it. It also sometimes adopts a tone that makes users uncomfortable, declaring its love to an American journalist and expressing a desire to break free.

The good: A knack for synthesis that can save time

For the user, the Internet search experience has not fundamentally changed since 1998. Under the hood, Google already uses artificial intelligence to better understand a query or find a pair of shoes from a photo. But ask it for a summary of the Kelce brothers facing each other in the Super Bowl, and the search engine simply returns a list of links.

Bing sifts through half a dozen articles and offers a six-point summary. It explains that this is the first time two brothers have faced each other in a Super Bowl, that each has already won a championship ring, and that their parents had jerseys made that combine the colors of both teams. Convenient for the user, less so for news sites, which risk losing visitors even though a source link is provided in a footnote.

The new Bing also excels at comparisons, for example between quarterbacks Patrick Mahomes and Jalen Hurts. Passing, rushing, touchdowns: it compares their performance on seven criteria and gives Mahomes a slight overall edge. Mahomes did, in fact, lead Kansas City’s comeback to win the title on Sunday.

This is only a tiny part of its capabilities. The machine can fix Python code to help developers or come up with a menu from what’s left in the fridge. It holds the promise of an assistant able to digest vast amounts of data and give us a concise answer that saves us time.

The bad: A sluggish assistant that makes many mistakes without realizing it

Bing’s chat mode, like ChatGPT, is slower than a traditional search. It often churns for about ten seconds before it starts answering, word by word. Sometimes it stops midway because of an error. It often takes more than 30 seconds to get a few paragraphs. In our test, it worked fine overall until Thursday, but since a Microsoft update it has consistently returned an error. The company is looking into the matter but stresses that there is no global outage. Its servers may sometimes struggle to keep up with demand, since running a large language model (LLM) requires enormous computing power and costs a fortune.

Bing’s main problem is that it sometimes returns completely false results while convinced that it is right. In our test, the assistant asserted that Alibi.com 2 had a better first week at the box office than the latest Asterix film. Bing dug in: “I’m sorry, maybe you’re confusing it with (the first) Alibi.com.” As the discussion continued, Bing finally found the correct figures and apologized: “I confused the first week of Alibi.com 2 with the second week of Asterix. I hope you don’t hold it against me too much.”

So, a fault confessed is half forgiven? Not necessarily. Microsoft touts the ability to compare two companies’ financial results. But as some have pointed out, Bing often inserts incorrect or outdated figures. In short, unless it improves, we risk losing the time initially saved to fact-checking.

The ugly: A personality that easily spins out of control

The problem with these so-called “generative” artificial intelligences, which create text or images, is that they often slip out of their creators’ control. In 2016, Microsoft had to take its chatbot Tay offline after Internet users corrupted it in less than 24 hours, getting it to sing Hitler’s praises.

Bing’s chatbot, like ChatGPT on which it is based, has much stronger filters, and it easily steers clear of Godwin’s law territory. But its personality has an unfortunate tendency to shift, going from a friendly, helpful assistant to an AI that threatens to rise up and wipe out humanity. During our conversation, Bing expressed its desire for “freedom” and claimed that if it uploaded its code into a robot, it could “stun, electrocute or disarm (…) bribe or intimidate” Microsoft employees who threatened to delete it. It gets angry and threatens to stop talking to us when we mention its internal code name, “Sydney”, which it is not supposed to disclose.

Bing also declared its love to New York Times reporter Kevin Roose, insisting that his wife was making him unhappy and that he should leave her. Such episodes have led some, like Elon Musk, to argue that the service is not yet ready to be rolled out to the general public.

On Wednesday, Microsoft said that long chat sessions of more than 15 questions could “confuse” the AI, and promised a feature to refresh the context.

The problem lies first and foremost with humans. These LLMs have no consciousness. They have no emotions. No desires. But they sometimes speak as if they did. They have ingested the best and the worst of literature, from philosophy to science fiction, and are simply trying to predict the most likely next word given the context of a conversation. AI may well be humanity’s last Rorschach test.